WikiProject Brands (Rated Start-class, Low-importance)

WikiProject Computing / Hardware (Rated Start-class, Low-importance)

GT240 is VP5, not VP4!

At http://en.wikipedia.org/wiki/Nvidia_PureVideo#The_Fifth_Generation_PureVideo_HD the GT240 is listed.

Read http://www.nvidia.com/object/io_1260507693932.html -- Preceding unsigned comment added by 95.91.113.66 (talk) 01:01, 18 January 2010 (UTC)

'Certified'?

I don't know if PureVideo HD-capable hardware is actually 'certified' for Blu-ray and HD DVD playback, but it's a fact that PureVideo HD hardware accelerates video in the same encodings (MPEG-2, MPEG-4, VC-1, H.264) as most Blu-ray/HD DVD video streams. However, most computers (Pentium 4 class or above) can also decode the video stream smoothly on the CPU, without the assistance of a video card, so is the term confusing?

...Yeah, you're probably right. From a vendor's standpoint, Nvidia's product guidelines dictate the minimum feature set/characteristics for the PureVideo HD label/seal (for example, GeForce 7600GT or better, HDCP, 256 MB video RAM, etc.). But whether Nvidia handles the program with full-blown compliance testing, or simply 'rubber-stamps' the OEM's submission, I don't know.

...I think a category should be added for 'Purevideo software', since that's ANOTHER point of confusion. The add-on PureVideo software is basically just an MPEG-2 video player (since XP doesn't come with an MPEG-2 decoder), with some extra fancy post-processing modes not available to third-party players like PowerDVD, WinDVD, etc. If you're only interested in the hardware acceleration, then any third-party DVD player (PowerDVD, WinDVD, Nero Showtime, etc.) is sufficient: the hardware decoding is accessed through a public API called DirectX Video Acceleration (DXVA).

For HD DVD/Blu-ray playback, the PureVideo software isn't involved at all (so purchasing it is unnecessary). WinDVD HD, PowerDVD HD, and the Nero HD DVD plugin talk directly to the ATI/Nvidia display driver (using DXVA).

...I would love to see a video chipset/purevideo version comparison matrix chart. —Preceding unsigned comment added by 72.208.26.142 (talk) 05:22, August 26, 2007 (UTC)

Fair use rationale for Image:Nvidia purevideo logo.png

Image:Nvidia purevideo logo.png is being used on this article. I notice the image page specifies that the image is being used under fair use but there is no explanation or rationale as to why its use in this Wikipedia article constitutes fair use. In addition to the boilerplate fair use template, you must also write out on the image description page a specific explanation or rationale for why using this image in each article is consistent with fair use.

Please go to the image description page and edit it to include a fair use rationale. Using one of the templates at Wikipedia:Fair use rationale guideline is an easy way to ensure that your image is in compliance with Wikipedia policy, but remember that you must complete the template. Do not simply insert a blank template on an image page.

If there is other fair use media, consider checking that you have specified the fair use rationale on the other images used on this page. Note that any fair use images uploaded after 4 May, 2006, and lacking such an explanation will be deleted one week after they have been uploaded, as described on criteria for speedy deletion. If you have any questions please ask them at the Media copyright questions page. Thank you.

BetacommandBot (talk) 17:22, 5 December 2007 (UTC)

This page is extremely Windows-centric. If this is a Windows-only product, that should be noted in the page. -- Preceding unsigned comment added by 74.71.245.199 (talk) 00:21, 6 March 2008 (UTC)

Naming confusions

I do not know any rules for editing Wikipedia articles, so instead I will write here and hopefully someone in the future will read this and change the article.

There is only PureVideo™ and a superset PureVideo™ HD. The headings Purevideo1, Purevideo2 and Purevideo3 are incorrect and need to be removed or replaced with the broader heading of simply PureVideo.

PureVideo features are dynamic, with additional functions added within the video driver and also improved/additional hardware on the video card. When Nvidia released the G84/G86 video cards with a faster video processor (VP2), this was dubbed PureVideo 2 by some media and review sites. In this sense, I guess the colloquial term PureVideo 2 deserves at least a mention. The additional PureVideo hardware on the G84/G86 video cards is not linked solely to the video processor (VP2); it also relies on the bitstream processor (BSP) and encryptor (AES128).

Here is a link to an article which better describes the additional features on G84/G86 video cards: http://anandtech.com/video/showdoc.aspx?i=2977

Currently, the Wikipedia article seems to confuse VP2 and VP3 with PureVideo 2 and 3.

If the advances in hardware features and/or additional driver functions need to be distinguished, then they could be shown as PureVideo Generation 1, 2, 3, etc. to show major advances, without ambiguously implying a change in the trademark name or additional versions of PureVideo. I feel a chart would be simpler, such as the one on the Nvidia site linked in the references, which shows features corresponding to hardware. The corresponding date or driver version which exposed each feature could be shown separately. Here's a link to the product comparisons: http://www.nvidia.com/docs/CP/11036/PureVideo_Product_Comparison.pdf

An example of the confusion can be seen under the heading PureVideo 3. In its current state, the article implies a hardware change beginning with 9600GT cards, which is ambiguous. The additional features (Dynamic Contrast Enhancement • Dynamic Blue, Green & Skin Tone Enhancements • Dual‐Stream Decode Acceleration • Microsoft Windows Vista Aero display mode compatibility for Blu‐ray & HD DVD playback) are all driver enhancements enabled in ForceWare version 174, which can be exposed with previous hardware too.

Here's a review which explains that PureVideo's implementation is identical on the 9600GT and 8800GT: http://www.tomshardware.com/reviews/nvidia-geforce-9600-gt,1780-4.html

Here's a link to driver release notes which show the additional features in release 174 (pages 3 and 4): http://us.download.nvidia.com/Windows/174.74/174.74_WinVista_GeForce_Release_Notes.pdf

VP2/VP3 controversy

To date, there are no video cards with a VP3 video processor, although one is proposed for the future. When these video cards are released, they will not automatically be 'PureVideo 3'. -- Preceding unsigned comment added by 203.59.18.120 (talk) 23:02, 23 September 2008 (UTC)

...At least one Nvidia developer disagrees about the (non-)existence of VP3. Introduced with the 55nm/65nm Nvidia GPUs, VP3 adds some new documented hardware, and some undocumented hardware. It's most certainly more than just new driver caps on previous (8800GT) hardware. See this discussion thread: http://episteme.arstechnica.com/eve/forums/a/tpc/f/8300945231/m/732002115931/p/2 -- Preceding unsigned comment added by 68.122.48.253 (talk) 01:11, 23 October 2008 (UTC)

You might be right about the 9600GT (G94) and 8800GT (G92) both being VP2, but the newer laptop and desktop GeForce 9300/9400/9500 line are definitely VP3-class GPUs.

--- My 2 cents... Nvidia may not trademark 'Purevideo2' or 'Purevideo3', but Nvidia's marketing has done nothing to stamp out the confusion spread across all their partner websites, advertising copy, etc. Why can't an anonymous Nvidia employee clean up the main page and put the RIGHT information there? -- Preceding unsigned comment added by 71.103.25.50 (talk) 17:15, 26 September 2008 (UTC)

Supported software?

What software supports PureVideo? I downloaded an HD video from Nvidia, and the CPU usage while playing it in WMP is pretty high. Does WMP use PureVideo? Do other players? Is there a list? Is there a way to test if PureVideo is used? --Xerces8 (talk) 08:31, 2 October 2008 (UTC)

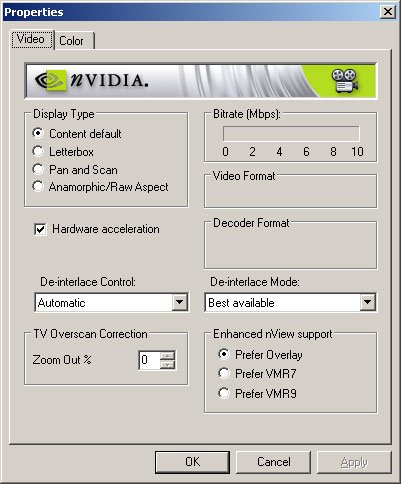

- If you have another decoder installed, use this to select the default decoder. What's your video card? If it's not one of the supported Nvidia video cards, then PureVideo would simply be a software decoder (using the CPU). --Voidvector (talk) 16:26, 2 October 2008 (UTC)

- The card is 8800GT. --Xerces8 (talk) 09:47, 3 October 2008 (UTC)

H.264 Level

In the second-generation paragraph, it currently says 'VP2 capable cards are able to decode H.264 High@L5.0'. I seriously doubt this (imo Level 4.1 is the 'guaranteed' limit, and that is what the article says as well) and will remove the sentence if no source (other than the XBMC forum) can be added. --CE 62.178.80.242 (talk) 09:48, 30 April 2009 (UTC)

- Though this isn't conclusive, I tried to play some sample 1080p60 AVC clips recorded by the Panasonic HS700 AVCHD camcorder. (The 1080p60 recording mode isn't AVCHD-compliant; it's a special proprietary mode using the same H.264/AC3 codecs at a higher bitrate.) In both Nero Showtime 9 (32-bit Windows Vista on an Nvidia Quadro NVS160M) and Windows 7 Ultimate (Nvidia 9800GT), the clip played in slow motion. Nero's info display said the MPEG-4 file was 'Level 4.2'. The frame-rate counter in Nero showed a playback rate of ~45 fps (somewhat lower than 60 fps). If I turned off hardware acceleration in Nero, the clip played at full speed (60 fps) but used more CPU time. I downloaded the 1080p60 sample videos from here:

http://www.avsforum.com/avs-vb/showthread.php?t=1225613&page=5 (links checked on 03/22/2010) —Preceding unsigned comment added by 99.182.67.114 (talk) 14:01, 24 March 2010 (UTC)

- Information about Level 5 removed. --Regression Tester (talk) 01:02, 25 March 2010 (UTC)

Nasty advertisement of Media Player Classic

Not only is Windows Media Player not mentioned, but MPC directly uses its renderer. --195.74.250.81 (talk) 03:58, 14 May 2010 (UTC)

- Well, you're wrong there. If you're referring to renderers, MPC-HC supports several different ones, including standard Windows ones (like EVR and VMR), most or all of which may be used by WMP but can't be said to be part of it (they are a part of Windows; this is particularly the case for EVR, but I believe you'd find VMR-9 on Windows XP N editions, for example, provided you have the appropriate version of DirectX). The more important thing here isn't renderers but codecs. MPC-HC also has internal codecs which support Nvidia PureVideo. WMP, on the other hand, doesn't support PureVideo by itself; for licensing reasons, such codecs aren't distributed with standalone WMP. Some variants of newer Windows versions come with codecs which support PureVideo; this is already mentioned in the article. You can also use other codecs, like the Nvidia ones, in WMP. But of course any software which supports DirectShow codecs (or Media Foundation codecs in newer versions of Windows) should work with PureVideo DirectShow or Media Foundation codecs, provided you meet the other requirements (in Vista and Windows 7, the EVR renderer is needed); this doesn't have to be stated. P.S. Do note that MPC and MPC-HC, while sharing the same original code base, have diverged from each other a fair bit. Nil Einne (talk) 16:01, 16 May 2010 (UTC)

The only renderers that can play PureVideo are Microsoft's. This is the PureVideo article. --195.74.250.81 (talk) 17:57, 16 May 2010 (UTC)

- That depends on what you mean by PureVideo. One of the problems is that it isn't really defined, and it's arguably not really possible to define since Nvidia doesn't. Is PureVideo solely the hardware acceleration functionality, or do you only call it PureVideo when access is provided via certain methods? In other words, if you access the hardware decoding functionality via VDPAU, is that PureVideo? Or only if you access it via DirectX Video Acceleration? What if you access the hardware decoding functionality via CUDA (e.g. as with CoreAVC)? If you include either or both of the first and last, then clearly you don't need a Microsoft renderer. Even if you restrict it to DXVA, EVR or VMR & overlay are required depending on the OS, and these can indeed probably be called Microsoft renderers; but this isn't surprising, since DXVA is a Microsoft component, and these renderers are best described as (core) components of Windows that are used by (as they are intended to be used by) many Windows programs, including WMP and MPC-HC along with a host of others. Of course, as I pointed out above, whatever renderer you use, you also need an appropriate codec, which WMP doesn't generally provide by itself but MPC-HC does. As I mentioned, these are available with certain versions of Windows, and this is already described in the article. Interestingly enough, these codecs are apparently even included in the appropriate N versions of Windows 7, if I understand [1] correctly, although as Media Foundation apparently is not, I'm not sure if any program can use them. Regardless, do note that N editions exclude 'Windows Media Player or other Windows Media-related technologies', not just WMP, and Media Foundation is listed separately from WMP anyway. The distinction between WMP and Windows components is not a clear-cut one because of the way Microsoft handles things; however, the article already adequately describes the situation, so I don't see any need to include WMP.
Nil Einne (talk) 23:46, 16 May 2010 (UTC)

Full protected

This page has been fully protected. A fully protected page can be edited only by administrators. The protection has been currently placed for three months. The 'Edit' tab for a protected page is replaced by a 'View source' tab, where users can view and copy, but not edit, the wikitext of that page.

Any modification to this page should be proposed here. After consensus has been established for the change, or if the change is uncontroversial, any administrator, including me, may make the necessary edits to the protected page. To draw administrators' attention to a request for an edit to a protected page, place the {{editprotected}} template on the talk page.

All requests to unprotect this page may be submitted at the page meant for such requests. Please get in touch directly with me on my talk page for any clarifications. Wifione ....... Leave a message 18:20, 23 March 2011 (UTC)

Edit request from Hajj 3, 13 April 2011

This edit request has been answered. Set the |answered= or |ans= parameter to no to reactivate your request.

The Nvidia GT 520 was released today (April 2011), not in January 2011 as stated in the table; please correct this. Hajj 3 (talk) 12:43, 13 April 2011 (UTC)

- iirc, this error (or a similar one) was one of the reasons that led to the revert war. --Regression Tester (talk) 14:27, 13 April 2011 (UTC)

- Please provide evidence of a consensus for this change. Woody (talk) 21:41, 15 April 2011 (UTC)

Edit request from Hajj 3, 13 April 2011

This edit request has been answered. Set the |answered= or |ans= parameter to no to reactivate your request.

According to this, the card also has a new feature set D for PureVideo, making it the first card to do so:

Feature Set D adds support for WebM/VP8

Hajj 3 (talk) 12:55, 13 April 2011 (UTC)

- While I am not convinced it is the first card to support 'D' (afaict, all cards with '0x1...' or at least '0x10..' support it), this would be a useful change. (Note that VP8 support is currently only a rumour, no confirmation available.)--Regression Tester (talk) 14:29, 13 April 2011 (UTC)

- So do I view this as consensus? Wifione ....... Leave a message 02:41, 14 April 2011 (UTC)

- Consensus for changing the release date and feature set to 'D'. Unfortunately, we can't give an explanation for 'D' yet, so far it is known that it contains all 'C' features.--Regression Tester (talk) 09:24, 14 April 2011 (UTC)

- I've disabled the edit-protected template for now. Please come to a consensus here about what you specifically want changed, (ie specific instructions: please change X to Y). When you've got that, please change answered back to no and someone will be along. Thanks, Woody (talk) 21:43, 15 April 2011 (UTC)

Edit request June 13 2011

This edit request has been answered. Set the |answered= or |ans= parameter to no to reactivate your request.

This is in regards to Feature Set D and the Nvidia Geforce GT 520.

According to this comparison article, Feature Set D (in GT 520) is a performance enhancement over Feature Set C (in GT 430).

- http://www.anandtech.com/show/4380/discrete-htpc-gpus-shootout/10

(1) NVIDIA confirmed that the GT 430 couldn't decode 60 fps videos at 80 Mbps.

(2) For 1080p24 streams, we find that the GT 430 is unable to keep up with the real time decode frame rate requirements at 110 Mbps. For 1080p60 streams, the limit gets further reduced to somewhere between 65 and 70 Mbps. The GT 520 has no such issues.

(3) We asked NVIDIA about the changes in the new VDPAU feature set and what it meant for Windows users. They indicated that the new VPU was a faster version, also capable of decoding 4K x 2K videos. This means that the existing dual stream acceleration for 1080p videos has now been bumped up to quad stream acceleration.

Feature Set D is also in Nvidia Quadro NVS 4200M (PCI Device ID: 0x1056). This is found by comparing the first Linux driver release (270.41.03) to support Feature Set D and the more recent 275.09.04 release.

- ftp://download.nvidia.com/XFree86/Linux-x86/270.41.03/README/supportedchips.html

- ftp://download.nvidia.com/XFree86/Linux-x86/275.09.04/README/supportedchips.html

At this time, Geforce GT 520 and Quadro NVS 4200M are the only GPUs to support this feature. — Preceding unsigned comment added by 114.76.184.117 (talk • contribs)

- imo, it is sufficient to say that the GT 520 is the best-performing PureVideo hardware so far (the exact decoding ability depends heavily on the implementation, so numbers do not make much sense although 1080p60 typically does not work on older hardware). It is not yet relevant to mention D: There are no user-visible differences so far (some size limits have been lifted but that was not documented so far). --Regression Tester (talk) 14:56, 13 June 2011 (UTC)

- Not sure what is being requested here and whether there is consensus for it. Please clarify. — Martin (MSGJ · talk) 14:07, 14 June 2011 (UTC)

VDPAU feature set D

Nvidia has released documentation stating that the GT 520 supports VDPAU feature set D, but has also clarified that currently no VDPAU driver supports resolutions above 2k x 2k (and no API exists for MVC decoding). So while a GT 520 certainly supports resolutions higher than 2k x 2k (and is able to decode MVC with hardware acceleration), this is currently not possible with VDPAU. --Regression Tester (talk) 11:58, 4 July 2011 (UTC)

- The Windows benchmarks clearly show that the performance increase for VC-1 and MPEG-2 decoding already works on Windows and Linux drivers. Why are you so stubborn and refuse to acknowledge the fact it works already?

http://images.anandtech.com/graphs/graph4380/38171.png

http://images.anandtech.com/graphs/graph4380/38172.png

http://www.nvnews.net/vbulletin/showpost.php?p=2432786&postcount=371 -- Preceding unsigned comment added by 175.138.159.46 (talk) 09:32, 8 July 2011 (UTC)

- Thank you for finding the post on nvnews, it is exactly what I have been searching for! Please note that VC-1 decoding is not slower on the GTX580 (where H264 decoding is nearly exactly half as fast): http://www.nvnews.net/vbulletin/showpost.php?p=2426779&postcount=368 --Regression Tester (talk) 10:50, 8 July 2011 (UTC)

- Concerning MPEG-2: Users complained very often on the forum that H.264 decoding via PureVideo was not fast enough for their use case. H.264 decoding has seen an enormous performance boost with VP5 that should be noted prominently in the article (as it is). MPEG-2 HD decoding was 100+ fps before VP5, so I have problems believing that anybody will notice a (possibly) improved decoding performance (I at least do not remember anybody complaining about MPEG-1/MPEG-2 decoding performance, and I have never seen a sample >60 fps; the 1080p60 samples played easily on earlier PureVideo hardware when encoded in MPEG-2, but typically could not be played if they were encoded in H.264). It should therefore at least not be mentioned in the same sentence as the H.264 decoding improvement, because this would give the impression that it is similarly important for end users (which it certainly isn't). --Regression Tester (talk) 10:59, 8 July 2011 (UTC)

- You must be blind or intentionally ignoring the facts. http://images.anandtech.com/graphs/graph4380/38171.png shows 77 FPS on VP4 versus 141 FPS on VP5; that's DOUBLE the performance for VC-1 decoding. http://images.anandtech.com/graphs/graph4380/38172.png shows 98 FPS on VP4 versus 189 FPS on VP5; that's again DOUBLE the performance for MPEG-2 decoding. I don't know why I even bother arguing with you; you're just a stubborn person who refuses to acknowledge the facts.

- Given that I use your source to prove my point, I don't think so. --Regression Tester (talk) 12:12, 12 July 2011 (UTC)

Retarded blind persons like you shouldn't be editing since you can't be objective and you're only inflating your retarded way of thinking and ego. — Preceding unsigned comment added by 175.138.158.174 (talk) 16:39, 12 July 2011 (UTC)

Quad-stream decoding

Before adding the marketing claim of 'support for quad-stream decoding' for the fifth PureVideo generation, please explain what it exactly means (decoding several H.264 streams - including more than four - at the same time is possible with earlier hardware).--Regression Tester (talk) 17:09, 19 July 2011 (UTC)

...modern video-codec hardware operates on thread-based memory structures, allowing it to context-switch (multitask) among multiple streams, provided there is sufficient wall time and video RAM. Well, that's a horrific oversimplification of the underlying hardware (and the API). Just pretend that video-frame decoding operations are chainable as 'display lists' (much like an OpenGL or Direct3D display command list). Obviously, total video decode throughput is fixed, so as you increase the load (frame size, total video bitstream rate, macroblock complexity and total count), the decode frame rate will degrade proportionally. This is analogous to how a 3D accelerator that renders a particular 3D scene at 1280x720 at 60 fps renders the same scene at 640x720 at ~120 fps, or at 1920x1080 at ~26.67 fps.
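The throughput argument above is simple arithmetic: with a fixed pixel budget, frame rate is inversely proportional to the number of pixels per frame. A minimal Python sketch, assuming pixel rate is the only limit (real decoders also depend on bitrate and macroblock complexity, which this ignores); the function names are illustrative, not part of any Nvidia API:

```python
# Sketch: a fixed pixel throughput (pixels per second) means the achievable
# frame rate scales inversely with pixels per frame.
# Assumption: pixel rate is the only bottleneck (bitrate, entropy decoding
# and macroblock complexity are ignored).


def pixel_throughput(width: int, height: int, fps: float) -> float:
    """Pixels per second needed to produce width x height frames at fps."""
    return width * height * fps


def scaled_fps(ref_width: int, ref_height: int, ref_fps: float,
               width: int, height: int) -> float:
    """Frame rate reachable at width x height with the reference throughput."""
    budget = pixel_throughput(ref_width, ref_height, ref_fps)
    return budget / (width * height)


if __name__ == "__main__":
    # Reference point from the comment: 1280x720 at 60 fps.
    for w, h in [(1280, 720), (640, 720), (1920, 1080)]:
        print(f"{w}x{h}: {scaled_fps(1280, 720, 60, w, h):.2f} fps")
```

Run as a script, it reproduces the figures in the comment: 60.00, 120.00 and 26.67 fps.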

PureVideo 4 is designed to handle realtime decoding of dual-stream High Profile @ Level 4.2 (1920x1080p30 x 2). The underlying architectural limits are comparable (but not identical in all ways) to the following:

- 1 video at 1080p60

- 1 MVC Blu-ray 3D video (decoded into two independent 1080p24 streams)

- 12 480p videos
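One rough way to see why the workloads listed above are grouped together is to compare their aggregate pixel rates against one 1080p60 stream. A sketch, assuming '480p' means 720x480 at 30 frames/s (the comment does not say) and treating pixel rate as a stand-in for decoder load:

```python
from typing import Iterable, Tuple

# (width, height, frames per second)
Stream = Tuple[int, int, float]

# Budget: one 1920x1080 stream at 60 frames/s, in pixels per second.
BUDGET = 1920 * 1080 * 60


def total_pixel_rate(streams: Iterable[Stream]) -> float:
    """Sum of pixels per second over all concurrently decoded streams."""
    return sum(w * h * fps for w, h, fps in streams)


def fits_budget(streams: Iterable[Stream], budget: float = BUDGET) -> bool:
    """True if the combined pixel rate does not exceed the budget."""
    return total_pixel_rate(streams) <= budget


dual_1080p30 = [(1920, 1080, 30)] * 2
mvc_3d = [(1920, 1080, 24)] * 2      # two 1080p24 views of one 3D title
twelve_480p = [(720, 480, 30)] * 12  # assumption: 480p = 720x480 @ 30 fps

for name, s in [("2x1080p30", dual_1080p30),
                ("MVC 3D", mvc_3d),
                ("12x480p30", twelve_480p)]:
    print(name, total_pixel_rate(s), fits_budget(s))
```

Under these assumptions, twelve 480p30 streams need exactly the same pixel rate as one 1080p60 stream (124,416,000 pixels/s), and the MVC case needs 80% of it.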

This isn't really new ... even the old MPEG-2 decoder chipsets (late 1990s) that were used in set-top box appliances offered this feature. The hardware supported realtime decoding of 1 HD (1080i30) video-stream, or 6 SD video-streams (480i). Chipset architects could offer this by calculating the minimum required throughput for every major portion of the decoding pipeline: (VLC rate, IDCT macroblock rate, motion-comp pixel-rate, etc.)
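The per-stage sizing described above can be illustrated for the IDCT and motion-compensation stages, which work on 16x16 macroblocks. A sketch, assuming 1080i30 means 30 interlaced 1920x1080 frames per second and 480i means 30 interlaced 720x480 frames per second; the VLC and pixel-rate stages would be sized the same way with their own units:

```python
import math

MB = 16  # MPEG-2 macroblock size in luma pixels


def macroblocks_per_second(width: int, height: int, frames_per_second: int) -> int:
    """Macroblocks per second the IDCT/motion-compensation stages must handle.

    Frame dimensions are rounded up to whole macroblocks, so 1080 lines
    become 68 macroblock rows (1088 coded lines).
    """
    mbs_per_frame = math.ceil(width / MB) * math.ceil(height / MB)
    return mbs_per_frame * frames_per_second


hd = macroblocks_per_second(1920, 1080, 30)     # one 1080i30 stream
sd6 = 6 * macroblocks_per_second(720, 480, 30)  # six 480i streams
print(hd, sd6, sd6 <= hd)
```

This gives 244,800 macroblocks/s for the HD stream versus 243,000 for six SD streams, so under these assumptions the two workloads load the macroblock stages almost equally.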

On a modern Windows PC with Internet Explorer (or any browser that supports Flash 10.2 or later), you can open up multiple tabs, and play accelerated video in each of them simultaneously. — Preceding unsigned comment added by 99.109.197.169 (talk) 04:09, 2 November 2011 (UTC)

VDPAU size restrictions

It was claimed here that the VDPAU documentation - that explains that the maximum horizontal resolution is 2048 no matter which hardware generation is used - is wrong and that VDPAU feature set D does support higher horizontal resolutions for H264 (4k). I was able to test VDPAU feature set D hardware and using hardware-accelerated H264 decoding, it does not support horizontal resolutions above 2048 (this is a driver limitation). Please do not change the article in this respect until a fixed driver is released.--Regression Tester (talk) 08:38, 2 August 2011 (UTC)
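The point above is that the effective VDPAU limit is the smaller of what the hardware can do and what the driver allows. A sketch of that rule, using the figures quoted on this page (2048x2048 for VDPAU; 4032x4080 and 4096x4096 for Feature Sets D and E from the article text). The table and function names are hypothetical, and the numbers reflect the 2011-2012 drivers discussed here:

```python
from typing import Tuple

# Driver-side cap reported on this page for VDPAU, regardless of hardware.
VDPAU_DRIVER_LIMIT = (2048, 2048)

# Hardware maxima quoted in the article text (driver- and codec-dependent).
HARDWARE_LIMIT = {"D": (4032, 4080), "E": (4096, 4096)}


def vdpau_max_size(feature_set: str) -> Tuple[int, int]:
    """Largest frame VDPAU will decode: the smaller of hardware and driver limits.

    Feature sets without a listed hardware limit fall back to the driver
    limit (an assumption made for this sketch).
    """
    hw_w, hw_h = HARDWARE_LIMIT.get(feature_set, VDPAU_DRIVER_LIMIT)
    return min(hw_w, VDPAU_DRIVER_LIMIT[0]), min(hw_h, VDPAU_DRIVER_LIMIT[1])


def vdpau_can_decode(feature_set: str, width: int, height: int) -> bool:
    max_w, max_h = vdpau_max_size(feature_set)
    return width <= max_w and height <= max_h


print(vdpau_can_decode("D", 3840, 2160))  # 4K UHD on Feature Set D via VDPAU
```

The design choice is deliberate: even though Feature Set D hardware can decode 3840x2160, the check fails because the driver limit is applied on top.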

Recent edits

I have reverted recent edits which do not look very constructive, at least they removed some facts about Purevideo:

- MVC is supported on some Purevideo hardware but cannot be used from VDPAU. It was never claimed anywhere that VDPAU supports MVC afaict.

- VDPAU limits the resolution to 2048x2048 even on newest Nvidia hardware, see above (and in the article) for references.

- From a user's perspective, there is no difference between Nvidia VDPAU feature sets C and D. They are of course (very) different hardware, but offer the same abilities. Also see the same references as above.

Please do not change these parts of the article again without offering any sources or explanation.--Regression Tester (talk) 09:32, 5 November 2012 (UTC)

Mods here have their heads in their ass and remove good info

I add new GeForce 700 GPUs to the tables and you retarded mods remove my additions, no wonder this crap site is dying. Curse the day all you retarded admins and mods were ever born.

VP or PV

For some reason, PureVideo is abbreviated to VP and not PV? This should at least be clarified, and preferably explained, in the WP article. PizzaMan (♨♨) 22:07, 26 June 2015 (UTC)

PureVideo is Nvidia's hardware SIP core that performs video decoding. PureVideo is integrated into some of the Nvidia GPUs, and it supports hardware decoding of multiple video codec standards: MPEG-2, VC-1, H.264, and HEVC. PureVideo occupies a considerable amount of a GPU's die area and should not be confused with Nvidia NVENC.[1] In addition to video decoding on chip, PureVideo offers features such as edge enhancement, noise reduction, deinterlacing, dynamic contrast enhancement and color enhancement.

Operating system support

The PureVideo SIP core needs to be supported by the device driver, which provides one or more interfaces such as NVDEC, VDPAU, VAAPI or DXVA. One of these interfaces is then used by end-user software, for example VLC media player or GStreamer, to access the PureVideo hardware and make use of it.

Nvidia's proprietary device driver is available for multiple operating systems and support for PureVideo has been added to it. Additionally, a free device driver is available, which also supports the PureVideo hardware.

Linux

Support for PureVideo has been available in Nvidia's proprietary driver version 180 since October 2008 through VDPAU.[2] Since April 2013,[citation needed] nouveau also supports PureVideo hardware and provides access to it through VDPAU and partly through XvMC.[3]

Microsoft Windows

Microsoft's Windows Media Player, Windows Media Center and modern video players support PureVideo. Nvidia also sells PureVideo decoder software which can be used with media players that use DirectShow. On systems with dual GPUs, the codec must either be configured to use the Nvidia GPU or the application must run on the Nvidia GPU to use PureVideo. Media players which use LAV, ffdshow or Microsoft Media Foundation codecs are able to use PureVideo capabilities.

OS X

Apple has sold OS X computers with Nvidia graphics hardware, so support is probably available.[citation needed]

PureVideo HD

PureVideo HD (see 'naming confusions' below) is a label which identifies Nvidia graphics boards certified for HD DVD and Blu-ray Disc playback, to comply with the requirements for playing Blu-ray/HD DVDs on PC:

- End-to-end encryption (HDCP) for digital-displays (DVI-D/HDMI)

- Realtime decoding of H.264 high-profile L4.1, VC-1 Advanced Profile L3, and MPEG-2 MP@HL (1080p30) decoding @ 40 Mbit/s

- Realtime dual-video stream decoding for HD DVD/Blu-ray Picture-in-Picture (primary video @ 1080p, secondary video @ 480p)
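The list above can be read as a small set of playback targets. A sketch, assuming the 1080p30 @ 40 Mbit/s ceiling applies to all three codecs and that the 480p secondary video is at most 720x480; profiles and levels (High@L4.1, VC-1 AP@L3, MP@HL) and HDCP are not modelled, and `Stream` and `meets_pvhd_targets` are hypothetical names, not part of any Nvidia or Blu-ray API:

```python
from dataclasses import dataclass
from typing import Optional

SUPPORTED_CODECS = {"H.264", "VC-1", "MPEG-2"}
PRIMARY_MAX = (1920, 1080, 30.0, 40.0)  # width, height, fps, Mbit/s (assumed for all codecs)
SECONDARY_MAX = (720, 480)              # assumption: "480p" secondary video


@dataclass
class Stream:
    codec: str
    width: int
    height: int
    fps: float
    mbit_per_s: float


def within(stream: Stream, max_w: int, max_h: int) -> bool:
    """Codec is one of the three listed and the frame fits max_w x max_h."""
    return (stream.codec in SUPPORTED_CODECS
            and stream.width <= max_w and stream.height <= max_h)


def meets_pvhd_targets(primary: Stream, secondary: Optional[Stream] = None) -> bool:
    """Check a primary stream, plus an optional picture-in-picture stream."""
    w, h, fps, rate = PRIMARY_MAX
    ok = within(primary, w, h) and primary.fps <= fps and primary.mbit_per_s <= rate
    if secondary is not None:
        ok = ok and within(secondary, *SECONDARY_MAX)
    return ok


film = Stream("H.264", 1920, 1080, 24, 35)
print(meets_pvhd_targets(film, Stream("MPEG-2", 720, 480, 30, 8)))
```

A real player would also have to check profile, level and the HDCP output path, which this sketch leaves out.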

The first generation PureVideo HD

The original PureVideo engine was introduced with the GeForce 6 series. Based on the GeForce FX's video engine (VPE), PureVideo re-used the MPEG-1/MPEG-2 decoding pipeline and improved the quality of deinterlacing and overlay resizing. Compatibility with DirectX 9's VMR9 renderer was also improved. Other VPE features, such as the MPEG-1/MPEG-2 decoding pipeline, were left unchanged. Nvidia's press material cited hardware acceleration for VC-1 and H.264 video, but these features were not present at launch.

Starting with the release of the GeForce 6600, PureVideo added hardware acceleration for VC-1 and H.264 video, though the level of acceleration is limited when benchmarked side by side with MPEG-2 video. VPE (and PureVideo) offloads the MPEG-2 pipeline starting from the inverse discrete cosine transform, leaving the CPU to perform the initial run-length decoding, variable-length decoding, and inverse quantization.[4] First-generation PureVideo, by contrast, offered only limited VC-1 assistance (motion compensation and post-processing).
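The MPEG-2 split described above amounts to cutting an ordered list of decode stages at the first offloaded stage. A simplified sketch (inverse scan and other details omitted), with stages ordered as in a typical MPEG-2 decoder, where variable-length decoding produces the run/level pairs that run-length decoding then expands:

```python
from typing import List, Tuple

# Simplified MPEG-2 decode order (inverse scan and reconstruction omitted).
MPEG2_STAGES = [
    "variable-length decoding",
    "run-length decoding",
    "inverse quantization",
    "inverse DCT",
    "motion compensation",
]


def split_pipeline(stages: List[str], first_offloaded: str) -> Tuple[List[str], List[str]]:
    """Return (cpu_stages, gpu_stages) when the GPU takes over at first_offloaded."""
    i = stages.index(first_offloaded)
    return stages[:i], stages[i:]


# First-generation PureVideo / VPE: the GPU starts at the inverse DCT.
cpu, gpu = split_pipeline(MPEG2_STAGES, "inverse DCT")
print("CPU:", cpu)
print("GPU:", gpu)
```

Moving the cut point earlier (e.g. to "variable-length decoding") models the full bitstream offload that later generations added.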

The first generation PureVideo HD is sometimes called 'PureVideo HD 1' or VP1, although this is not an official Nvidia designation.

The second generation PureVideo HD

Starting with the G84/G86 GPUs (Tesla microarchitecture), sold as the GeForce 8400/8500/8600 series, Nvidia substantially redesigned the H.264 decoding block inside its GPUs. The second generation PureVideo HD added a dedicated bitstream processor (BSP) and an enhanced video processor, which enabled the GPU to completely offload the H.264 decoding pipeline. VC-1 acceleration was also improved, with PureVideo HD now able to offload more of the VC-1 decoding pipeline's backend (the inverse discrete cosine transform (iDCT) and motion compensation stages). The frontend (bitstream) pipeline is still decoded by the host CPU.[5][6] The second generation PureVideo HD enabled mainstream PCs to play HD DVD and Blu-ray movies, as the majority of the processing-intensive video decoding was now offloaded to the GPU.

The second generation PureVideo HD is sometimes called 'PureVideo HD 2' or VP2, although this is not an official Nvidia designation. It corresponds to Nvidia Feature Set A (or 'VDPAU Feature Set A').

This is the earliest generation that Adobe Flash Player supports for hardware acceleration of H.264 video on Windows.

The third generation PureVideo HD

This implementation of PureVideo HD, VP3, added entropy-decoding hardware to offload VC-1 bitstream decoding with the G98 GPU (sold as GeForce 8400GS),[7] as well as additional minor enhancements for the MPEG-2 decoding block. The functionality of the H.264 decoding pipeline was left unchanged. In essence, VP3 offers complete hardware decoding for all three video codecs of the Blu-ray Disc format: MPEG-2, VC-1, and H.264.

All third generation PureVideo hardware (G98, MCP77, MCP78, MCP79MX, MCP7A) cannot decode H.264 for the following horizontal resolutions: 769–784, 849–864, 929–944, 1009–1024, 1793–1808, 1873–1888, 1953–1968 and 2033–2048 pixels.[8]
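A direct encoding of the width list above, plus an observation of our own (not documented by Nvidia): every listed range spans exactly one count of 16-pixel macroblock columns, so the same test can be written in macroblocks. The self-check at the end confirms the two versions agree for all widths up to 2048:

```python
import math

# Inclusive H.264 width ranges (pixels) that third-generation (Feature Set B)
# hardware cannot decode, as listed above.
VP3_H264_BAD_WIDTHS = [
    (769, 784), (849, 864), (929, 944), (1009, 1024),
    (1793, 1808), (1873, 1888), (1953, 1968), (2033, 2048),
]


def vp3_h264_width_ok(width: int) -> bool:
    """False if the width falls in one of the listed unsupported ranges."""
    return not any(lo <= width <= hi for lo, hi in VP3_H264_BAD_WIDTHS)


# Our observation: each range is one macroblock-column count
# (e.g. 769..784 pixels -> 49 macroblocks of 16 pixels).
BAD_MB_COLUMNS = {49, 54, 59, 64, 113, 118, 123, 128}


def vp3_h264_width_ok_mb(width: int) -> bool:
    """Same test, expressed in 16-pixel macroblock columns."""
    return math.ceil(width / 16) not in BAD_MB_COLUMNS


# The two formulations agree for every width up to 2048.
assert all(vp3_h264_width_ok(w) == vp3_h264_width_ok_mb(w) for w in range(1, 2049))

print(vp3_h264_width_ok(1920), vp3_h264_width_ok(1024))
```

For example, 1920-pixel video is unaffected, while 1024-pixel video hits the bug.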

The third generation PureVideo HD is sometimes called 'PureVideo HD 3' or VP3, although this is not an official Nvidia designation. It corresponds to Nvidia Feature Set B (or 'VDPAU Feature Set B').

The fourth generation PureVideo HD

This implementation of PureVideo HD, VP4, added hardware to offload MPEG-4 Advanced Simple Profile (the compression format implemented by the original DivX and Xvid) bitstream decoding with the GT215, GT216 and GT218 GPUs (sold as GeForce GT 240, GeForce GT 220 and GeForce 210/G210, respectively).[9] The H.264 decoder no longer suffers the frame-size restrictions of VP3, and VP4 adds hardware acceleration for MVC, an H.264 extension used on 3D Blu-ray discs. MVC acceleration is OS dependent: it is fully supported on Microsoft Windows through the Microsoft DXVA and Nvidia CUDA APIs, but is not supported through Nvidia's VDPAU API.

The fourth generation PureVideo HD is sometimes called 'PureVideo HD 4' or VP4, although this is not an official Nvidia designation. It corresponds to Nvidia Feature Set C (or 'VDPAU Feature Set C').

The fifth generation PureVideo HD

The fifth generation of PureVideo HD, introduced with the GeForce GT 520 (Fermi microarchitecture) and also included in the Nvidia GeForce 600/700 series GPUs (Kepler microarchitecture), has significantly improved performance when decoding H.264.[10] It is also capable of decoding 2160p 4K Ultra-High Definition (UHD) videos at 3840 × 2160 pixels (double the 1080p Full High Definition standard in both the vertical and horizontal dimensions) and, depending on the driver and the codec used, higher resolutions of up to 4032 × 4080 pixels.

The fifth generation PureVideo HD is sometimes called 'PureVideo HD 5' or 'VP5', although this is not an official Nvidia designation. This generation of PureVideo HD corresponds to Nvidia Feature Set D (or 'VDPAU Feature Set D').

The sixth generation PureVideo HD

The sixth generation of PureVideo HD, introduced with the first Maxwell-microarchitecture GPUs, e.g. the GeForce GTX 750/GTX 750 Ti (GM107), and also included in GeForce 900 series GPUs such as the GTX 970 and GTX 980 (GM204), has significantly improved performance when decoding H.264 and MPEG-2. It is also capable of decoding Digital Cinema Initiatives (DCI) 4K videos at 4096 × 2160 pixels and, depending on the driver and the codec used, higher resolutions of up to 4096 × 4096 pixels. GPUs with Feature Set E support an enhanced error concealment mode, which provides more robust error handling when decoding corrupted video streams.

The sixth generation PureVideo HD is sometimes called 'PureVideo HD 6' or 'VP6', although this is not an official Nvidia designation. This generation of PureVideo HD corresponds to Nvidia Feature Set E (or 'VDPAU Feature Set E').

The seventh generation PureVideo HD

The seventh generation of PureVideo HD, introduced with the GeForce GTX 960 and GTX 950 (GM206, a second generation Maxwell GPU), adds full hardware decoding of H.265 Version 1 (Main and Main 10 profiles) to the GPU's video engine. The Feature Set F hardware decoder also supports full fixed-function hardware decoding of the VP9 video codec.

Previous Maxwell GPUs implemented HEVC playback using a hybrid decoding solution, which involved both the host-CPU and the GPU's GPGPU array. The hybrid implementation is significantly slower than the dedicated hardware in VP7's video-engine.

The seventh generation PureVideo HD is sometimes called 'PureVideo HD 7' or 'VP7', although this is not an official Nvidia designation. This generation of PureVideo HD corresponds to Nvidia Feature Set F (or 'VDPAU Feature Set F').

The eighth generation PureVideo HD

The eighth generation of PureVideo HD, introduced with the Pascal-microarchitecture GPUs (GeForce GTX 1080, GTX 1070, GTX 1060, GTX 1050 Ti, GTX 1050 and GT 1030), adds full hardware decoding of the HEVC Main 12 profile to the GPU's video engine.

Previous Maxwell GM200/GM204 GPUs implemented HEVC playback using a hybrid decoding solution, which involved both the host-CPU and the GPU's GPGPU array. The hybrid implementation is significantly slower than the dedicated hardware in VP8's video-engine.

The eighth generation PureVideo HD is sometimes called 'PureVideo HD 8' or 'VP8', although this is not an official Nvidia designation. This generation of PureVideo HD corresponds to Nvidia Feature Set H (or 'VDPAU Feature Set H').

The ninth generation PureVideo HD

The ninth generation of PureVideo HD was introduced with the NVIDIA TITAN V, a Volta-microarchitecture GPU.

The ninth generation PureVideo HD is sometimes called 'PureVideo HD 9' or 'VP9', although this is not an official Nvidia designation. This generation of PureVideo HD corresponds to Nvidia Feature Set I (or 'VDPAU Feature Set I').

The tenth generation PureVideo HD

The tenth generation of PureVideo HD was introduced with the Turing-microarchitecture GPUs: the NVIDIA GeForce RTX 2080 Ti, RTX 2080, RTX 2070, RTX 2060, GTX 1660 Ti, GTX 1660 and GTX 1650.

The tenth generation PureVideo HD is sometimes called 'PureVideo HD 10' or 'VP10', although this is not an official Nvidia designation. This generation of PureVideo HD corresponds to Nvidia Feature Set J (or 'VDPAU Feature Set J').

Naming confusion

Because the introduction and subsequent rollout of PureVideo technology were not synchronized with Nvidia's GPU release schedule, the question of which PureVideo capabilities each Nvidia GPU supported caused considerable customer confusion. The first generation PureVideo GPUs (GeForce 6 series) spanned a wide range of capabilities. At the low end of the GeForce 6 series (6200), PureVideo was limited to standard-definition content (720×576). The mainstream and high-end GeForce 6 models were split between older products (6800 GT), which did not accelerate H.264/VC-1 at all, and newer products (6600 GT) with added VC-1/H.264 offloading capability.

In 2006, PureVideo HD was formally introduced with the launch of the GeForce 7900, which had the first generation of PureVideo HD. In 2007, when the second generation PureVideo HD (VP2) hardware launched with the GeForce 8500 GT/8600 GT/8600 GTS, Nvidia expanded the PureVideo HD label to cover both the first generation GPUs (retroactively called 'PureVideo HD 1' or VP1; GeForce 7900/8800 GTX) and the newer VP2 GPUs. This led to a confusing product portfolio containing GPUs with two distinctly different generations of capability: the newer VP2-based cores (GeForce 8500 GT/8600 GT/8600 GTS/8800 GT) and the older PureVideo HD 1-based cores (GeForce 7900/G80).

Nvidia claims that all GPUs carrying the PureVideo HD label fully support Blu-ray/HD DVD playback with the proper system components. For H.264/AVC content, VP1 offers markedly inferior acceleration compared to newer GPUs, placing a much greater burden on the host CPU. However, a sufficiently fast host CPU can play Blu-ray without any hardware assistance whatsoever.

Table of GPUs containing a PureVideo SIP block

| Graphics card brand name | GPU chip code name | PureVideo HD | VDPAU feature set | First release date | Notes |
|---|---|---|---|---|---|
| GeForce 6 series | NV4x | VP1 | Not supported | - | NV40-based models of the 6800 do not accelerate VC-1/H.264 |
| GeForce 7 series | G7x | VP1 | Not supported | - | - |
| GeForce 8800 Ultra, 8800 GTX, 8800 GTS (320/640 MB) | G80 | VP1 | Not supported | November 2006 | - |
| GeForce 8400 GS, 8500 GT | G86 | VP2 | A | April 2007 | - |
| GeForce 8600 GT, 8600 GTS | G84 | VP2 | A | April 2007 | - |
| GeForce 8800 GS, 8800 GT, 8800 GTS (512 MB/1 GB), 9600 GSO, 9800 GT, 9800 GTX, 9800 GTX+, 9800 GX2, GTS 240 (OEM) | G92 | VP2 | A | October 2007 | - |
| GeForce 8400 GS Rev. 2 | G98 | VP3[11] | B | December 2007 | Earlier cards use the G86 core without VP3 support |
| GeForce 8200, 8300 | C77 | VP3 | B | January 2008 | Not suitable for running CUDA |
| GeForce 9600 GSO 512, 9600 GT | G94 | VP2 | A | February 2008 | - |
| GeForce 9600M GT | G96 | VP3[12] | A[13] | June 2008 | - |
| GeForce GTX 260, GTX 275, GTX 280, GTX 285, GTX 295 | GT200 | VP2 | A | June 2008 | - |
| GeForce 9400 GT, 9500 GT | G96 | VP2[14] | A | July 2008 | - |
| GeForce 9300M GS, 9300 GS, 9300 GE | G98 | VP3[11] | B | October 2008 | Mostly found in laptops and on motherboards |
| GeForce 205, 210/G210, 310, G210M, 305M, 310M, 8400 GS Rev. 3[15] | GT218 | VP4[9] | C | October 2009 (April 2009 for the 8400 GS Rev. 3[15]) | Introduced decoding of MPEG-4 (Advanced) Simple Profile (DivX/Xvid) |
| GeForce GT 220, 315, GT 230M, GT 240M, GT 325M, GT 330M | GT216 | VP4[9] | C | October 2009 | - |
| GeForce GT 240, GT 320, GT 340, GTS 250M, GTS 260M, GT 335M, GTS 350M, GTS 360M | GT215 | VP4 | C | November 2009 | - |
| GeForce GTX 465, GTX 470, GTX 480, GTX 480M | GF100 | VP4 | C | March 2010 | - |
| GeForce GTX 460, GTX 470M, GTX 485M | GF104 | VP4 | C | July 2010 | - |
| GeForce GT 420 OEM, GT 430, GT 440, GT 620 (non-OEM), GT 630 (40 nm), GT 730 (DDR3), GT 415M, GT 420M, GT 425M, GT 435M, GT 525M, GT 540M, GT 550M | GF108 | VP4 | C | September 2010 | - |
| GeForce GTS 450, GT 445M, GTX 460M, GT 555M | GF106 | VP4 | C | September 2010 | - |
| GeForce GTX 570, GTX 580, GTX 590 | GF110 | VP4 | C | November 2010 | - |
| GeForce GTX 560 Ti, GTX 570M, GTX 580M, GT 645 | GF114 | VP4 | C | January 2011 | - |
| GeForce GTX 550 Ti, GTX 560M, GT 640 (OEM) | GF116 | VP4 | C | March 2011 | - |
| GeForce 410M, GT 520MX, 510, GT 520, GT 610, GT 620 (OEM) | GF119 | VP5 | D | April 2011 | Introduced 4K UHD video decoding |
| GeForce GT 620M, GT 625M, GT 710M, GT 720M, GT 820M | GF117 | VP5 | D | April 2011 | - |
| GeForce GT 630 (28 nm), GT 640 (non-OEM), GTX 650, GT 730 (OEM), GT 640M, GT 645M, GT 650M, GTX 660M, GT 740M, GT 745M, GT 750M, GT 755M | GK107 | VP5 | D | March 2012 | - |
| GeForce GTX 660 (OEM), GTX 660 Ti, GTX 670, GTX 680, GTX 690, GTX 760, GTX 760 Ti, GTX 770, GTX 680M, GTX 680MX, GTX 775M, GTX 780M, GTX 860M, GTX 870M, GTX 880M | GK104 | VP5 | D | March 2012 | - |
| GeForce GTX 650 Ti, GTX 660, GTX 670MX, GTX 675MX, GTX 760M, GTX 765M, GTX 770M | GK106 | VP5 | D | September 2012 | - |
| GeForce GTX 780, GTX 780 Ti, GTX TITAN, GTX TITAN BLACK, GTX TITAN Z | GK110 | VP5 | D | February 2013 | - |
| GeForce GT 630 rev. 2, GT 635, GT 640 rev. 2, GT 710, GT 720, GT 730 (GDDR5), GT 730M, GT 735M, GT 740M | GK208 | VP5 | D | April 2013 | - |
| GeForce GTX 745, GTX 750, GTX 750 Ti, GTX 850M, GTX 860M, 945M, GTX 950M, GTX 960M | GM107 | VP6 | E | February 2014 | Introduced DCI 4K video decoding |
| GeForce 830M, 840M, 920MX, 930M, 930MX, 940M, 940MX, MX110, MX130 | GM108 | VP6 | E | March 2014 | - |
| GeForce GTX 970, GTX 980, GTX 970M, GTX 980M | GM204 | VP6 | E | September 2014 | - |
| GeForce GTX 750 SE, GTX 950, GTX 960 | GM206 | VP7 | F | January 2015 | Introduced dedicated HEVC video decoding (Main and Main 10) and dedicated VP9 video decoding |
| GeForce GTX TITAN X, GeForce GTX 980 Ti | GM200 | VP6 | E | March 2015 | - |
| GeForce GTX 1070, GTX 1070 Ti, GTX 1080 | GP104 | VP8 | H | May 2016 | Introduced dedicated HEVC video decoding (Main 12) |
| GeForce GTX 1060 | GP106 | VP8 | H | July 2016 | - |
| NVIDIA TITAN Xp, TITAN X, GeForce GTX 1080 Ti | GP102 | VP8 | H | August 2016 | - |
| GeForce GTX 1050, GTX 1050 Ti | GP107 | VP8 | H | October 2016 | - |
| GeForce GT 1030, MX150 | GP108 | VP8 | H | May 2017 | - |
| Tesla V100-SXM2, V100-PCIE, NVIDIA TITAN V, Quadro GV100 | GV100 | VP9 | I | November 2017 | - |
| NVIDIA TITAN RTX, GeForce RTX 2080 Ti | TU102 | VP10 | J | September 2018 | Introduced dedicated HEVC video decoding (Main 4:4:4 12) |
| GeForce RTX 2080 Super, RTX 2080, RTX 2070 Super | TU104 | VP10 | J | September 2018 | Introduced dedicated HEVC video decoding (Main 4:4:4 12) |
| GeForce RTX 2060, RTX 2060 Super, RTX 2070 | TU106 | VP10 | J | October 2018 | Introduced dedicated HEVC video decoding (Main 4:4:4 12) |
| GeForce GTX 1660, GTX 1660 Ti | TU116 | VP10 | J | February 2019 | Introduced dedicated HEVC video decoding (Main 4:4:4 12) |
| GeForce GTX 1650 | TU117 | VP10 | J | April 2019 | Introduced dedicated HEVC video decoding (Main 4:4:4 12) |
| Ion, Ion-LE (first-generation Ion)[16] | C79 | VP3 | B | - | - |
| Ion 2 (next-generation Ion) | GT218 | VP4 | C | - | - |
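Because several brand names were reused across different chips, the table is more reliable when queried by GPU code name than by marketing name. The sketch below hand-copies a small subset of the rows above and collects every (chip, PureVideo HD generation, feature set) combination sold under one brand name. The helper names and the suffix-stripping rules are illustrative assumptions, not an official naming scheme.

```python
import re

# A small, hand-copied subset of the table above:
# (GPU code name, PureVideo HD generation, VDPAU feature set, model names).
ROWS = [
    ("G86", "VP2", "A", ["8400 GS", "8500 GT"]),
    ("G98", "VP3", "B", ["8400 GS Rev. 2"]),
    ("GT218", "VP4", "C", ["210", "G210", "310", "8400 GS Rev. 3"]),
    ("GF108", "VP4", "C", ["GT 430", "GT 440", "GT 730 (DDR3)"]),
    ("GK107", "VP5", "D", ["GTX 650", "GT 730 (OEM)"]),
    ("GK208", "VP5", "D", ["GT 710", "GT 720", "GT 730 (GDDR5)"]),
]


def base_name(model: str) -> str:
    """Strip parenthesised qualifiers and a trailing 'Rev. N' from a model name."""
    model = re.sub(r"\s*\(.*?\)", "", model)  # e.g. " (DDR3)", " (OEM)"
    model = re.sub(r"\s+rev\.\s*\d+$", "", model, flags=re.IGNORECASE)  # " Rev. 2"
    return model.strip()


def variants(model: str) -> list:
    """Return every (chip, generation, feature set) row sold under one brand name."""
    wanted = base_name(model)
    return [
        (chip, gen, fs)
        for chip, gen, fs, models in ROWS
        if any(base_name(m) == wanted for m in models)
    ]
```

With this subset, `variants("8400 GS")` returns three different chips spanning VP2 to VP4, and `variants("GT 730")` returns one VP4 chip and two VP5 chips, which is exactly the kind of ambiguity described in the naming-confusion section.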

Nvidia VDPAU Feature Sets

Nvidia's VDPAU feature sets[17] group Nvidia GPUs into hardware generations, each supporting a different level of hardware decoding. For Feature Sets A, B and C, the maximum video width and height are 2048 pixels, the minimum width and height are 48 pixels, and all codecs are limited to a maximum of 8192 macroblocks (8190 for VC-1/WMV9). Partial acceleration means that VLD (bitstream) decoding is performed on the CPU, with the GPU performing only IDCT, motion compensation and deblocking. Complete acceleration means that the GPU performs all of VLD, IDCT, motion compensation and deblocking.
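The macroblock cap, not the 2048-pixel dimension limit, is usually the binding constraint. A 1920 × 1080 frame rounds up to 120 × 68 = 8160 macroblocks, just under the cap, whereas a frame at the 2048 × 2048 maximum would need 128 × 128 = 16384. The sketch below checks a frame size against the Feature Set A/B/C limits stated above; the function names and the `"h264"`/`"vc1"` codec labels are hypothetical, and codec-specific restrictions (such as the Feature Set B H.264 width gaps) are ignored.

```python
# Decoder limits for VDPAU Feature Sets A, B and C, as stated in the text.
MIN_DIM = 48
MAX_DIM = 2048
MAX_MACROBLOCKS = 8192
MAX_MACROBLOCKS_VC1 = 8190  # VC-1/WMV9 limit is two macroblocks lower
MB = 16  # macroblock edge length in pixels


def macroblocks(width: int, height: int) -> int:
    """Number of 16x16 macroblocks needed to cover a width x height frame."""
    return ((width + MB - 1) // MB) * ((height + MB - 1) // MB)


def fits_feature_set_abc(width: int, height: int, codec: str = "h264") -> bool:
    """Check a frame size against the Feature Set A/B/C size and macroblock limits."""
    if not (MIN_DIM <= width <= MAX_DIM and MIN_DIM <= height <= MAX_DIM):
        return False
    limit = MAX_MACROBLOCKS_VC1 if codec == "vc1" else MAX_MACROBLOCKS
    return macroblocks(width, height) <= limit
```

The lower VC-1 limit matters only at the edge: a 2048 × 1024 frame is exactly 128 × 64 = 8192 macroblocks, so it passes for H.264 but fails for VC-1.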

Feature Set A

- Supports complete acceleration for H.264 and partial acceleration for MPEG-1, MPEG-2 and VC-1/WMV9.

Feature Set B

- Supports complete acceleration for MPEG-1, MPEG-2, VC-1/WMV9 and H.264.

- No Feature Set B hardware can decode H.264 at the following widths: 769–784, 849–864, 929–944, 1009–1024, 1793–1808, 1873–1888, 1953–1968 and 2033–2048 pixels.

Feature Set C

- Supports complete acceleration for MPEG-1, MPEG-2, MPEG-4 Part 2 (a.k.a. MPEG-4 ASP), VC-1/WMV9 and H.264.

- Global motion compensation and Data Partitioning are not supported for MPEG-4 Part 2.
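The difference between partial and complete acceleration can be written as a lookup from feature set and codec to the decoding stages the GPU performs. The sketch below transcribes the Feature Set A–C lists above; the codec keys are hypothetical labels, and treating codecs that a feature set does not list as unaccelerated is an assumption. The MPEG-4 Part 2 restrictions of Feature Set C (no global motion compensation or data partitioning) are not modelled.

```python
from enum import Enum


class Accel(Enum):
    NONE = "none"          # assumed: codec not listed for this feature set
    PARTIAL = "partial"    # CPU does VLD; GPU does IDCT, MC and deblocking
    COMPLETE = "complete"  # GPU does VLD, IDCT, MC and deblocking


# Per-codec acceleration level, transcribed from the Feature Set A-C lists.
ACCELERATION = {
    "A": {"mpeg1": Accel.PARTIAL, "mpeg2": Accel.PARTIAL,
          "vc1": Accel.PARTIAL, "h264": Accel.COMPLETE},
    "B": {"mpeg1": Accel.COMPLETE, "mpeg2": Accel.COMPLETE,
          "vc1": Accel.COMPLETE, "h264": Accel.COMPLETE},
    "C": {"mpeg1": Accel.COMPLETE, "mpeg2": Accel.COMPLETE,
          "mpeg4_part2": Accel.COMPLETE, "vc1": Accel.COMPLETE,
          "h264": Accel.COMPLETE},
}

STAGES = ("VLD", "IDCT", "motion compensation", "deblocking")


def gpu_stages(feature_set: str, codec: str) -> tuple:
    """Return the decoding stages the GPU performs for a codec on a feature set."""
    level = ACCELERATION[feature_set].get(codec, Accel.NONE)
    if level is Accel.COMPLETE:
        return STAGES
    if level is Accel.PARTIAL:
        return STAGES[1:]  # VLD (bitstream) decoding stays on the CPU
    return ()
```

For VC-1, for example, a Feature Set A GPU leaves bitstream decoding to the CPU, while a Feature Set B GPU performs every stage itself, matching the VP2-to-VP3 change described earlier.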

Feature Set D

- Similar to Feature Set C, but adds support for decoding H.264 at resolutions up to 4032 × 4080 pixels and MPEG-1/MPEG-2 at resolutions up to 4032 × 4048 pixels.

Feature Set E

- Similar to Feature Set D, but adds support for decoding H.264 at resolutions up to 4096 × 4096 pixels and MPEG-1/MPEG-2 at resolutions up to 4080 × 4080 pixels. GPUs with VDPAU Feature Set E support an enhanced error concealment mode, which provides more robust error handling when decoding corrupted video streams. Cards with this feature set decode HEVC (H.265) using a combination of the PureVideo hardware and software running on the shader array, i.e. partial (hybrid) hardware decoding.
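The Feature Set D and E limits explain the table notes: 4K UHD (3840 × 2160) fits within Feature Set D, but DCI 4K (4096 × 2160) is 64 pixels wider than Feature Set D's 4032-pixel H.264 limit and therefore needs Feature Set E. The sketch below compares only these two feature sets, using the maximum sizes listed above; the codec keys and helper names are hypothetical.

```python
# Maximum decode sizes (width, height) in pixels for VDPAU Feature Sets D and E,
# as listed in the text. Codec keys are illustrative labels, not API names.
MAX_SIZE = {
    "D": {"h264": (4032, 4080), "mpeg12": (4032, 4048)},
    "E": {"h264": (4096, 4096), "mpeg12": (4080, 4080)},
}


def fits(feature_set: str, codec: str, width: int, height: int) -> bool:
    """True if width x height is within the stated maximum for that feature set."""
    max_w, max_h = MAX_SIZE[feature_set][codec]
    return width <= max_w and height <= max_h


def first_sufficient(codec: str, width: int, height: int):
    """Return the earlier of D or E whose limits cover the frame, else None."""
    for feature_set in ("D", "E"):
        if fits(feature_set, codec, width, height):
            return feature_set
    return None
```

Under these limits, a 4096 × 4096 MPEG-2 frame exceeds both feature sets, because Feature Set E caps MPEG-1/MPEG-2 at 4080 pixels in each dimension.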

Feature Set F

- Introduces dedicated hardware decoding of HEVC Main (8-bit) and Main 10 (10-bit) and of VP9, at resolutions up to 4096 × 2304 pixels.

Feature Set G

- Introduces dedicated hardware decoding of HEVC Main 12 (12-bit) at resolutions up to 4096 × 2304 pixels.

Feature Set H

- Feature Set H GPUs can perform hardware-accelerated decoding of H.265/HEVC video streams at resolutions up to 8192 × 8192 pixels (8K resolution).[18]
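HEVC support therefore moves from hybrid decoding (Feature Set E) to dedicated hardware (Feature Sets F, G and H), with the profile set and maximum frame size growing along the way. The sketch below encodes the Feature Set E–H descriptions above. It assumes that each later feature set keeps the HEVC profiles of the earlier one, and that Feature Set E's hybrid path applies regardless of profile; neither assumption is stated in the text. The function name and profile labels are hypothetical.

```python
from typing import Optional

# Dedicated HEVC decoding per VDPAU feature set: (profiles, (max width, max height)).
# Assumption: later sets keep the earlier sets' profiles.
HEVC_DEDICATED = {
    "F": ({"main", "main10"}, (4096, 2304)),
    "G": ({"main", "main10", "main12"}, (4096, 2304)),
    "H": ({"main", "main10", "main12"}, (8192, 8192)),
}
HYBRID_SETS = {"E"}  # PureVideo hardware plus software on the shader array


def hevc_decode_mode(feature_set: str, profile: str,
                     width: int, height: int) -> Optional[str]:
    """Return 'dedicated', 'hybrid', or None when the text describes no HEVC path."""
    if feature_set in HEVC_DEDICATED:
        profiles, (max_w, max_h) = HEVC_DEDICATED[feature_set]
        if profile in profiles and width <= max_w and height <= max_h:
            return "dedicated"
        return None
    if feature_set in HYBRID_SETS:
        return "hybrid"  # assumed to apply regardless of profile or size
    return None
```

For instance, 8K (7680 × 4320) HEVC Main content exceeds the 4096 × 2304 limit of Feature Sets F and G, and only Feature Set H decodes it in dedicated hardware.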

Feature Set I

Feature Set J

See also

- Unified Video Decoder, AMD's dedicated video decoding ASIC.

- DirectX Video Acceleration (DXVA), a video acceleration API for the Microsoft Windows operating system.

- VDPAU (Video Decode and Presentation API for Unix), Nvidia's current optimized media API for Linux/UNIX operating systems.

- Video Acceleration API (VA API), an alternative video acceleration API for Linux/UNIX operating systems.

- OpenMAX IL (Open Media Acceleration Integration Layer), a royalty-free, cross-platform media abstraction API from the Khronos Group.

- X-Video Motion Compensation (XvMC), the first media API for Linux/UNIX operating systems, now practically obsolete.

Older Nvidia video decoding hardware technologies

References

- 'NVIDIA GT200 Revealed - GeForce GTX 280 and GTX 260 Review | NVIDIA GT200 Architecture (cont'd)'. www.pcper.com. Retrieved 2016-05-10.

- 'NVIDIA Driver Brings PureVideo Features To Linux'. Phoronix. 2008-11-14.

- 'Nouveau Video Acceleration'. freedesktop.org.

- 'PureVideo: Digital Home Theater Video Quality for Mainstream PCs with GeForce 6 and 7 GPUs' (PDF). NVIDIA. p. 9. Retrieved 2008-03-03.

- 'PureVideo Support table' (PDF). NVIDIA. Retrieved 2007-09-27.

- 'PureVideo HD Support table' (PDF). NVIDIA. Retrieved 2008-10-28.

- 'G98 first review'. Expreview. Retrieved 2008-12-04.

- 'Implementation limits VDPAU decoder'. download.nvidia.com. Retrieved 2013-09-10.

- 'NVIDIA's GeForce GT 220: 40nm and DX10.1 for the Low-End'. AnandTech. Retrieved 2013-09-10.

- 'AnandTech Portal | Discrete HTPC GPU Shootout'. AnandTech. Retrieved 2013-09-10.

- 'nV News Forums - View Single Post - VDPAU capablilities and generations?'. nvnews.net. Archived from the original on 2013-05-22. Retrieved 2013-09-10.

- 'NVIDIA GeForce 9600M GT - NotebookCheck.net Tech'. notebookcheck.net. 2013-01-16. Retrieved 2013-09-10.

- 'Appendix A. Supported NVIDIA GPU Products'. us.download.nvidia.com. 2005-09-01. Retrieved 2013-09-10.

- http://forums.nvidia.com/index.php?showtopic=74108

- GeForce 8 Series § Technical summary

- 'Specifications'. NVIDIA. Retrieved 2013-09-10.

- 'Appendix G. VDPAU Support'. download.nvidia.com. 2019-06-10. Retrieved 2019-06-10.

- 'Nvidia LINUX X64 (AMD64/EM64T) DISPLAY DRIVER Version: 367.27'. http://www.nvidia.com/download/driverResults.aspx/104284/en-us